Lighting

Lighting can be a crucial element of game worlds. Lighting helps players understand the shape and material properties of objects; it creates atmosphere and mood; and it directs player attention.

Examples of the techniques described in this lesson are implemented in the 15-466-f19-base6 code.

By the way, I'm talking about lighting in this lesson and not about shadows; shadows will be discussed in a later lesson.

Light is Additive

Except in circumstances rarely encountered in game scenes, lighting is additive. For direct lighting (which we're going to focus on in this lesson), this means that the game must sum the contribution of every light in the scene at every pixel on the screen.

```
image[xy] = sum(shade(closest_surface[xy], light[l]));
```

The lighting techniques I discuss in this lesson all boil down to different ways to compute this sum.
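As a concrete (and hypothetical) example of that sum, here is a CPU-side sketch of shading a single pixel. The `Vec3`, `Surface`, and `PointLight` types and the shading model (Lambertian diffuse with inverse-square falloff) are assumptions for illustration, not the base code's shaders.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Minimal 3-component vector (hypothetical helper, not from the base code).
struct Vec3 {
    float x, y, z;
};
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(Vec3 a, Vec3 b) { return {a.x * b.x, a.y * b.y, a.z * b.z}; }
Vec3 operator*(float s, Vec3 a) { return {s * a.x, s * a.y, s * a.z}; }
float dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// The closest surface visible at a pixel.
struct Surface {
    Vec3 position;
    Vec3 normal;  // assumed unit length
    Vec3 albedo;
};

// Hypothetical point light with an RGB intensity.
struct PointLight {
    Vec3 position;
    Vec3 intensity;
};

// Contribution of one light: Lambertian diffuse with inverse-square falloff.
Vec3 shade(const Surface &s, const PointLight &l) {
    Vec3 to_light = l.position - s.position;
    float dist2 = dot(to_light, to_light);
    assert(dist2 > 0.0f);
    float inv_dist = 1.0f / std::sqrt(dist2);
    float n_dot_l = std::max(0.0f, dot(s.normal, inv_dist * to_light));
    return (n_dot_l / dist2) * (s.albedo * l.intensity);
}

// Light is additive: the pixel's color is the sum over every light.
Vec3 shade_pixel(const Surface &s, const std::vector<PointLight> &lights) {
    Vec3 sum{0.0f, 0.0f, 0.0f};
    for (const PointLight &l : lights) {
        sum = sum + shade(s, l);
    }
    return sum;
}
```

In a real renderer this loop runs in a fragment shader; the techniques below differ mainly in how many times, and over which pixels, it gets evaluated.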

Multi-pass: slow and old

The classic (late '80s, early '90s) method of rendering multiple lights is to render the scene once per light, summing the contributions:

```cpp
glClearColor(0.0, 0.0, 0.0, 0.0);
glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glEnable(GL_DEPTH_TEST);
glDisable(GL_BLEND);
glDepthFunc(GL_LESS);

//set up pipeline state for first light:
set_light(lights[0]);

//draw the scene:
draw_scene();

//add the remaining lights:
glEnable(GL_BLEND); //(A) each later pass is added on top of the previous passes
glBlendFunc(GL_ONE, GL_ONE);
glBlendEquation(GL_FUNC_ADD);

glDepthFunc(GL_EQUAL); //(B) only fragments matching the stored frontmost depth pass

for (uint32_t i = 1; i < lights.size(); ++i) {
	set_light(lights[i]);
	draw_scene();
}
```

A full, working example of multi-pass rendering is implemented in DemoLightingMultipassMode, part of the lighting example code.

I've marked this code as bad code because multi-pass rendering like this is not very efficient. Why? Well, let's do a back-of-the-envelope complexity computation.

Let's start with a few variables:

- \(L\) lights in the scene

- \(V\) vertices in the scene

- \(P\) pixels in the output framebuffer

- \(F\) fragments shaded when rendering the scene starting from a cleared depth buffer (like for light 0)

- \(F'\) fragments shaded when starting from a full depth buffer (like for lights 1 .. \(L-1\))

- \(B\) bytes per pixel in the framebuffer

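Notice that \(F' \le F\) and \(F' \le P\): against a full depth buffer, only the frontmost fragment at each pixel passes the depth test, so there can't possibly be more of those fragments than there are pixels in the framebuffer (or than were shaded in the first pass). \(F\) itself may exceed \(P\), because when starting from a cleared depth buffer, fragments that are later hidden by closer fragments still get shaded.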

With these values in place we can compute four quantities of interest which we can compare with other methods:

| Method | Vertices Shaded | Lighting Computations | Framebuffer Bytes Read | Framebuffer Bytes Written |
|---|---|---|---|---|
| Multi-pass | \(L V\) | \(F+(L-1)F'\) | \( B(L-1)F' \) | \( BF + B(L-1)F' \) |
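To get a feel for these formulas, the cost model can be written out directly. This is a sketch; the struct and field names are made up for illustration, and the inputs are whatever counts you measure or estimate for your own scene.

```cpp
#include <cassert>
#include <cstdint>

// Inputs to the back-of-the-envelope cost model (hypothetical names).
struct SceneCounts {
    std::uint64_t L;        // lights
    std::uint64_t V;        // scene vertices
    std::uint64_t F;        // fragments shaded from a cleared depth buffer
    std::uint64_t F_prime;  // fragments shaded against a full depth buffer
    std::uint64_t B;        // framebuffer bytes per pixel
};

struct Cost {
    std::uint64_t vertices_shaded;
    std::uint64_t lighting_computations;
    std::uint64_t bytes_read;
    std::uint64_t bytes_written;
};

// Multi-pass: one full scene pass per light; passes 1..L-1 blend,
// so each of their fragments reads and writes the framebuffer.
Cost multipass_cost(const SceneCounts &c) {
    assert(c.L >= 1);
    std::uint64_t extra = c.L - 1;  // number of blended passes
    return Cost{
        c.L * c.V,
        c.F + extra * c.F_prime,
        c.B * extra * c.F_prime,
        c.B * c.F + c.B * extra * c.F_prime,
    };
}
```

For example, with hypothetical counts of \(L = 8\), \(V = 100{,}000\), \(F = 3{,}000{,}000\), \(F' = 2{,}000{,}000\), and \(B = 4\), this gives 800,000 vertices shaded, 17 million lighting computations, 56 million bytes read, and 68 million bytes written. Every term except the first pass's write grows linearly with \(L\).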

Forward Rendering

Multi-pass light rendering came about because ye olde fixed-function GPUs often could only render with a few lights at once. With modern GPUs we can easily write a fragment shader to loop over as many lights as we'd like.

```cpp
glClearColor(0.0, 0.0, 0.0, 0.0);
glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glEnable(GL_DEPTH_TEST);
glDisable(GL_BLEND);
glDepthFunc(GL_LESS);

//set up pipeline state for all lights:
set_lights(lights);

//draw the scene:
draw_scene();
```

You can see how this works in practice in DemoLightingForwardMode. In particular, notice how lighting information is passed in arrays to BasicMaterialForwardProgram (contrast this with the single light in BasicMaterialProgram, used by the multi-pass example).
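Passing many lights to one shader usually means flattening them into fixed-size arrays plus a count. The sketch below assumes a hypothetical `MAX_LIGHTS` limit that would have to match the array sizes declared in the shader; the `Light` and `LightUniforms` layouts are illustrative and not necessarily what `BasicMaterialForwardProgram` uses.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical limit; must match the array sizes declared in the shader.
constexpr std::size_t MAX_LIGHTS = 16;

struct Light {
    float position[3];
    float color[3];
};

// Flat, uniform-friendly arrays: xyz triples laid out contiguously.
struct LightUniforms {
    std::array<float, 3 * MAX_LIGHTS> positions{};
    std::array<float, 3 * MAX_LIGHTS> colors{};
    int count = 0;  // how many entries the shader's loop should read
};

// Copy up to MAX_LIGHTS lights into flat arrays; extra lights are dropped.
LightUniforms pack_lights(const std::vector<Light> &lights) {
    LightUniforms u;
    std::size_t n = lights.size() < MAX_LIGHTS ? lights.size() : MAX_LIGHTS;
    for (std::size_t i = 0; i < n; ++i) {
        for (std::size_t k = 0; k < 3; ++k) {
            u.positions[3 * i + k] = lights[i].position[k];
            u.colors[3 * i + k] = lights[i].color[k];
        }
    }
    u.count = static_cast<int>(n);
    assert(static_cast<std::size_t>(u.count) <= MAX_LIGHTS);
    return u;
}
```

With a GL context, the arrays could then be uploaded with calls like `glUniform3fv(positions_loc, u.count, u.positions.data())` and `glUniform1i(count_loc, u.count)`, where `positions_loc` and `count_loc` are hypothetical uniform locations. Dropping every light past `MAX_LIGHTS` is the simplest policy; an engine would probably keep the most important lights (say, the closest) instead.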

Of course, we could further refine this technique by sorting lights to objects in order to avoid shading objects with lights that are too far away to matter. But as a first cut, we've already saved a ton of computational resources:

| Method | Vertices Shaded | Lighting Computations | Framebuffer Bytes Read | Framebuffer Bytes Written |
|---|---|---|---|---|
| Forward | \( V \) | \( L F \) | \( 0 \) | \( B F \) |
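The lights-to-objects refinement can be sketched with bounding spheres: a light only needs to be sent along with an object if the light's sphere of influence (centered on the light, out to the distance past which its contribution is negligible) overlaps the object's bounding sphere. The `Sphere` type and the function names here are hypothetical.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct Sphere {
    float x, y, z;  // center
    float r;        // radius
};

// Two spheres overlap if the distance between centers is at most r1 + r2.
// Squared distances are compared to avoid a square root.
bool overlaps(const Sphere &a, const Sphere &b) {
    float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    float rsum = a.r + b.r;
    return dx * dx + dy * dy + dz * dz <= rsum * rsum;
}

// Indices of the lights whose influence sphere touches the object's bounds.
std::vector<std::size_t> lights_for_object(const Sphere &object_bounds,
                                           const std::vector<Sphere> &light_bounds) {
    assert(object_bounds.r >= 0.0f);
    std::vector<std::size_t> result;
    for (std::size_t i = 0; i < light_bounds.size(); ++i) {
        if (overlaps(object_bounds, light_bounds[i])) result.push_back(i);
    }
    return result;
}
```

This costs one test per object-light pair on the CPU whenever lights or objects move, which is exactly the kind of bookkeeping that becomes painful as light counts rise.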

Deferred Rendering

Notice that the computational cost of forward rendering generally scales with the number of lights, even if those lights aren't influencing very many pixels. For example, consider a space ship with hundreds of small running lights on its hull. Even though every light only influences a tiny part of the ship's hull, the engine must either send information about all of those lights to the GPU and draw the ship as a single object, or divide the ship into smaller sections and do a lot of on-CPU work to assign the lights to each section they influence.

Deferred rendering is a method that uses rasterization on the GPU in order to sort lights to pixels. It does this by first rendering information about the scene's geometry and materials to a set of offscreen buffers (a "geometry buffer" or "g-buffer"), then rendering the lights as meshes that overlap every pixel the light illuminates. (Note that lights actually contribute illumination to every object that can see them, but the amount of this contribution falls off as the inverse square of the distance to the light, and -- as such -- there is a practical "outer radius" past which a light's contribution becomes negligible.)
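Under a pure inverse-square falloff, that outer radius comes from a one-line calculation: a light of intensity \(I\) contributes \(I / d^2\) at distance \(d\), so its contribution drops below a threshold \(\epsilon\) once \(d > \sqrt{I / \epsilon}\). The function below is a sketch of that computation; the function name is hypothetical and the threshold is a tuning assumption (something near one 8-bit output step, 1/256, is a plausible starting point).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Distance past which an inverse-square light contributes less than
// `threshold`: solve intensity / d^2 = threshold for d.
// Uses the brightest RGB channel so no channel is cut off early.
float light_outer_radius(float r, float g, float b, float threshold) {
    assert(threshold > 0.0f);
    float brightest = std::max(r, std::max(g, b));
    return std::sqrt(std::max(brightest, 0.0f) / threshold);
}
```

For instance, a white light of intensity 1 with a threshold of 1/256 gets a radius of 16 units. Because the pure inverse-square curve never actually reaches zero, many engines instead multiply the falloff by a window function that forces it to zero at a chosen radius, which avoids a visible edge where the light mesh ends.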

Aside on light geometry: how do you make geometry that covers all pixels that a light (might) contribute to? Depends on the light. For a point light, you'd probably use a sphere centered on the light; for a spot light, you'd probably use a cone. For a sky (hemisphere) light or a distant directional light, you'd probably just render a full-screen quad, because these lights potentially touch every pixel in the scene.
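For a spot light, the cone's dimensions follow from the light's range and half-angle: every lit point lies within the range and within the half-angle of the axis, so its distance along the axis is at most the range, and a cone of that height contains it. One subtlety: if the proxy mesh has its base vertices on a circle, the flat sides between those vertices cut inside the true cone, and pixels near the edge can be missed. The sketch below (hypothetical names; it assumes a cone mesh whose base is a regular polygon with `segments` sides) scales the base so the polygon's edges touch the circle instead.

```cpp
#include <cassert>
#include <cmath>

// Dimensions of a cone mesh used to cover a spot light's influence.
struct ConeProxy {
    float height;       // along the spot direction, from the light's position
    float base_radius;  // distance from the axis to each base vertex
};

// range: practical outer radius of the light.
// half_angle: spot half-angle in radians (must be less than pi/2).
// segments: number of sides of the mesh's polygonal base.
ConeProxy spot_cone_proxy(float range, float half_angle, int segments) {
    const float pi = 3.14159265358979f;
    assert(half_angle > 0.0f && half_angle < 0.5f * pi);
    assert(segments >= 3);
    // Radius of the ideal circular base at distance `range` along the axis.
    float circle_radius = range * std::tan(half_angle);
    // A regular n-gon with vertex radius R has edges at distance R*cos(pi/n)
    // from its center; dividing by cos(pi/n) makes the edges reach the circle.
    float polygon_fix = 1.0f / std::cos(pi / static_cast<float>(segments));
    return ConeProxy{range, circle_radius * polygon_fix};
}
```

With four segments the base must be about 1.41 times wider than the ideal circle; with many segments the correction approaches 1. A tessellated sphere for a point light has the same issue and needs a similar slight enlargement.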

```cpp
//--- draw scene geometry to gbuffer ---
glBindFramebuffer(GL_FRAMEBUFFER, gbuffer.fb);

glClearColor(0.0, 0.0, 0.0, 0.0);
glClear(GL_COLOR_BUFFER_BIT | GL_DEPTH_BUFFER_BIT);

glEnable(GL_DEPTH_TEST);
glDisable(GL_BLEND);
glDepthFunc(GL_LESS);

//store scene positions, normals, albedos:
draw_scene_geometry();

//--- accumulate lighting to screen ---
glBindFramebuffer(GL_FRAMEBUFFER, 0);

//NOTE: assume that the depth buffer from gbuffer.fb has been copied to the
// main (i.e., framebuffer 0) depth buffer. In practice, a second framebuffer
// with a shared depth attachment would be used to avoid this copy.

//lights are accumulated with additive blending, so start the color at black
// (only the color buffer is cleared; the copied depth is kept):
glClear(GL_COLOR_BUFFER_BIT);

glEnable(GL_DEPTH_TEST);
glDepthMask(GL_FALSE); //(A) light meshes are depth-tested but must not write depth

glEnable(GL_BLEND);
glBlendFunc(GL_ONE, GL_ONE);
glBlendEquation(GL_FUNC_ADD);

//make gbuffer information available to the shaders used to compute lighting:
glActiveTexture(GL_TEXTURE0);
glBindTexture(GL_TEXTURE_RECTANGLE, gbuffer.position_tex);
glActiveTexture(GL_TEXTURE1);
glBindTexture(GL_TEXTURE_RECTANGLE, gbuffer.normal_tex);
glActiveTexture(GL_TEXTURE2);
glBindTexture(GL_TEXTURE_RECTANGLE, gbuffer.albedo_tex);

//draw lights as meshes that will overlap all pixels the light influences:
draw_scene_lights();

//unbind the gbuffer textures:
glActiveTexture(GL_TEXTURE2);
glBindTexture(GL_TEXTURE_RECTANGLE, 0);
glActiveTexture(GL_TEXTURE1);
glBindTexture(GL_TEXTURE_RECTANGLE, 0);
glActiveTexture(GL_TEXTURE0);
glBindTexture(GL_TEXTURE_RECTANGLE, 0);

glDepthMask(GL_TRUE); //(B) restore depth writes for later drawing
```

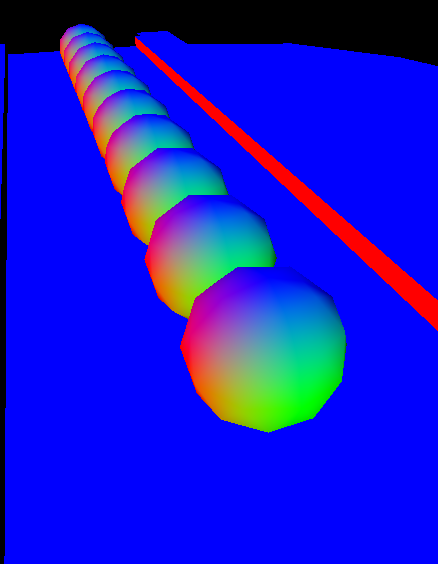

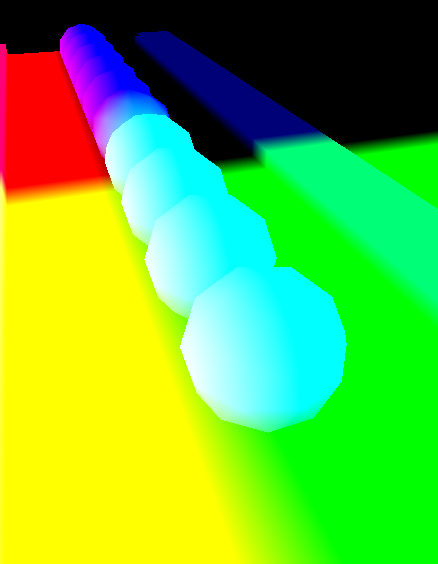

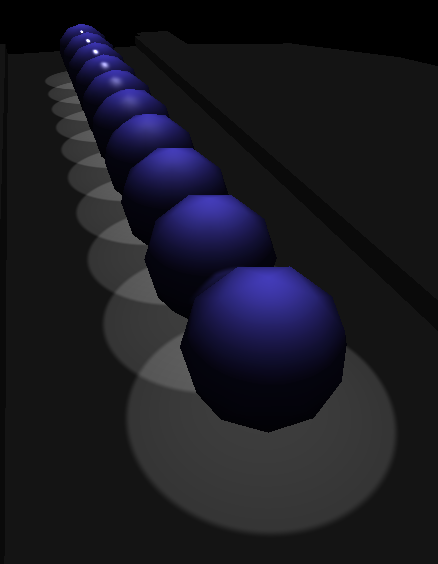

In the lighting demo code, you can press keys 1-4 to show the different components of the geometry buffer:

In order to analyze the complexity of deferred rendering, we need to define more variables:

- \(V_L\) vertices drawn in light meshes

- \(F_L\) fragments drawn by light meshes

- \(B_G\) bytes per pixel in the g-buffer

| Method | Vertices Shaded | Lighting Computations | Framebuffer Bytes Read | Framebuffer Bytes Written |
|---|---|---|---|---|
| Deferred | \( V + V_L \) | \( F_L \) | \( B_G F_L + B F_L \) | \( B F_L + B_G F \) |
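These formulas make the trade-off against forward rendering easy to check numerically. The sketch below evaluates both for a hypothetical version of the space ship from earlier; it counts the framebuffer reads done by additive blending in the light pass the same way the multi-pass row counts its blending reads. All names and numbers are assumptions for illustration.

```cpp
#include <cassert>
#include <cstdint>

// Cost-model inputs (hypothetical names; symbols as in the tables).
struct Counts {
    std::uint64_t L;    // lights
    std::uint64_t F;    // fragments in the geometry / forward pass
    std::uint64_t F_L;  // fragments produced by rasterizing all light meshes
    std::uint64_t B;    // bytes per pixel in the output framebuffer
    std::uint64_t B_G;  // bytes per pixel in the g-buffer
};

// Forward: every shaded fragment loops over all L lights; no blending reads.
std::uint64_t forward_lighting(const Counts &c) { return c.L * c.F; }
std::uint64_t forward_bytes(const Counts &c) { return c.B * c.F; }

// Deferred: one lighting computation per light-mesh fragment.
std::uint64_t deferred_lighting(const Counts &c) { return c.F_L; }

// Deferred bytes moved: write the g-buffer once, then for each light
// fragment read the g-buffer and read + write the framebuffer (blending).
std::uint64_t deferred_bytes(const Counts &c) {
    return c.B_G * c.F + c.B_G * c.F_L + 2 * c.B * c.F_L;
}
```

With hypothetical counts of 300 lights, 2,000,000 scene fragments, lights covering about 1,000 pixels each (\(F_L = 300{,}000\)), \(B = 4\), and a 28-byte g-buffer (three 32-bit floats for position, three for normal, and four 8-bit albedo channels, ignoring any padding), forward rendering performs 600 million lighting computations against deferred's 300,000, while deferred moves 66.8 million bytes to forward's 8 million. In other words, deferred rendering trades lighting arithmetic for memory traffic.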

Going Further

The discussion of lighting thus far has focused on direct lighting: light leaves a light source, hits a surface, and bounces to the viewer. A lot of the light we see in our environments is, in fact, indirect lighting: light that hits more than one surface before it gets to the viewer.

Indirect lighting is a fair bit more complex to compute, and -- until the last ten years or so -- was generally precomputed ("baked") and stored. These methods are still quite useful, and fall into two categories (which aren't actually mutually exclusive):

- Lightmaps -- textures which store information about incident light at every location in the level. These may be 2D textures addressed through a second UV set (since every location on an object needs its own unique lightmap data) or 3D textures (generally with some sparse structure, since large dense 3D textures use a lot of memory). Historically, lightmaps have been used to store both direct and indirect lighting (see: Quake), though their low resolution means that modern games often use a different solution for direct lighting.

- A light probe captures all of the light passing through a given point in a scene. An array of light probes can be used to approximate the light passing through every point in a scene -- basically, storing \( L_o \) from the rendering equation. If direct illumination is disregarded, light probes can be a good way to store indirect illumination, and they can even be re-rendered to provide real-time updates. (Higher-resolution light probes are also great for simulating approximate reflections.)
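Probes need some compact way to store the light arriving from every direction. One simple representation, used in Valve's Source engine, is the "ambient cube": six colors, one per axis direction, blended by the squared components of the surface normal. The sketch below shows only the lookup (the `RGB`, `AmbientCube`, and `evaluate` names are hypothetical); how the six colors are captured or baked, and how neighboring probes are blended, are left out. Spherical harmonics are another common choice of representation.

```cpp
#include <cassert>
#include <cmath>

struct RGB {
    float r, g, b;
};

// Light arriving from the six axis directions, in the order
// +X, -X, +Y, -Y, +Z, -Z.
struct AmbientCube {
    RGB face[6];
};

// Look up the probe for a unit surface normal (nx, ny, nz): each axis is
// weighted by its squared component, picking the + or - face by sign.
// For a unit normal the three weights sum to 1.
RGB evaluate(const AmbientCube &cube, float nx, float ny, float nz) {
    float len2 = nx * nx + ny * ny + nz * nz;
    assert(std::fabs(len2 - 1.0f) < 1e-3f);  // expects a normalized normal
    const RGB &cx = cube.face[nx >= 0.0f ? 0 : 1];
    const RGB &cy = cube.face[ny >= 0.0f ? 2 : 3];
    const RGB &cz = cube.face[nz >= 0.0f ? 4 : 5];
    float wx = nx * nx, wy = ny * ny, wz = nz * nz;
    return RGB{
        wx * cx.r + wy * cy.r + wz * cz.r,
        wx * cx.g + wy * cy.g + wz * cz.g,
        wx * cx.b + wy * cy.b + wz * cz.b,
    };
}
```

Because the weights sum to 1, a probe whose six faces all hold the same color returns exactly that color for every normal.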

On modern graphics hardware that supports ray-tracing acceleration, ray tracing may instead be used to compute indirect illumination. (Or even direct illumination on the very newest hardware, though that generally requires a lot of rays for good results.)

Conclusion

I've talked about how to structure direct lighting computation, but -- at least in high-end games and real-time graphics -- lighting computation is not a one-size-fits-all proposition. Forward and deferred rendering have different places in game engines, and they will probably continue to jockey for supremacy as pixel counts and graphics hardware memory sizes change -- indeed, engines may use forward rendering for most things but do a 1/2 or 1/4 size deferred rendering (or depth-only) pass in order to support certain specific effects (screen-space ambient occlusion, reflections, or atmospheric effects). Direct illumination may be real-time, with indirect illumination stored in a lightmap and reflections computed by mixing light probes and ray-traced reflection effects.

To get a feeling for how much complexity one can add to a rendering system, check out this excellent graphics study of DOOM 2016. Of particular note is how DOOM uses forward rendering with a light list, but pre-sorts lights into camera-relative spatial bins to save on computation.
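Binning can be sketched in simplified form with a 2D grid of screen tiles (the idea behind "tiled forward" or "Forward+" rendering): each light's screen-space rectangle is stamped into every tile it overlaps, and a fragment shader would then loop over only its own tile's list. DOOM's bins also subdivide the view along depth, so this is a simplification and not its implementation; `ScreenRect` and `bin_lights` are hypothetical, and projecting each light's bounding sphere to a rectangle is assumed to happen elsewhere.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// A light's screen-space footprint in pixels: [x0, x1) by [y0, y1).
struct ScreenRect {
    int x0, y0, x1, y1;
};

// Bin lights into a grid of tile_size x tile_size pixel tiles.
// Returns one list of light indices per tile, in row-major tile order.
std::vector<std::vector<std::size_t>> bin_lights(const std::vector<ScreenRect> &lights,
                                                 int width, int height, int tile_size) {
    assert(width > 0 && height > 0 && tile_size > 0);
    int tiles_x = (width + tile_size - 1) / tile_size;
    int tiles_y = (height + tile_size - 1) / tile_size;
    std::vector<std::vector<std::size_t>> bins(static_cast<std::size_t>(tiles_x * tiles_y));
    for (std::size_t i = 0; i < lights.size(); ++i) {
        // Clamp to the screen; skip lights that are entirely off-screen.
        int x0 = std::max(lights[i].x0, 0), y0 = std::max(lights[i].y0, 0);
        int x1 = std::min(lights[i].x1, width), y1 = std::min(lights[i].y1, height);
        if (x0 >= x1 || y0 >= y1) continue;
        // Every tile from the one holding (x0, y0) to the one holding (x1-1, y1-1).
        for (int ty = y0 / tile_size; ty <= (y1 - 1) / tile_size; ++ty) {
            for (int tx = x0 / tile_size; tx <= (x1 - 1) / tile_size; ++tx) {
                bins[static_cast<std::size_t>(ty * tiles_x + tx)].push_back(i);
            }
        }
    }
    return bins;
}
```

Tile size trades binning cost against precision: smaller tiles give shorter per-pixel light lists but more bins to fill.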